Kubernetes container images signing using Cosign, Kyverno, HashiCorp Vault and GitLab CI

Container images are a crucial part of the applications running in Kubernetes. But how can we make sure that the container images we build with CI/CD are the same images we run in Kubernetes? And how can we block untrusted images from running?

In this article I’ll show how to improve supply chain security using popular open-source tools:

- Sigstore Cosign is a modern signing and verification tool. I will use it for signing the container images in this article. What I especially love about Cosign is that it’s just a single binary, not a service.

- Kyverno is a powerful policy engine for Kubernetes. It has numerous use cases, one of which is image signature verification, including verification of images signed with Cosign.

- HashiCorp Vault is a secrets management service. Its transit secrets engine (so-called encryption as a service) can be used by Cosign and Kyverno to sign images and verify their signatures. In this case Vault acts as a trusted Key Management Service (KMS).

In this article GitLab CI will be used as the CI/CD tool for the container image build, although you can use any CI/CD you’re comfortable with (GitHub Actions, Jenkins and so on). I will also use GitLab Container Registry to store container images, but you’re free to use any container registry you like.

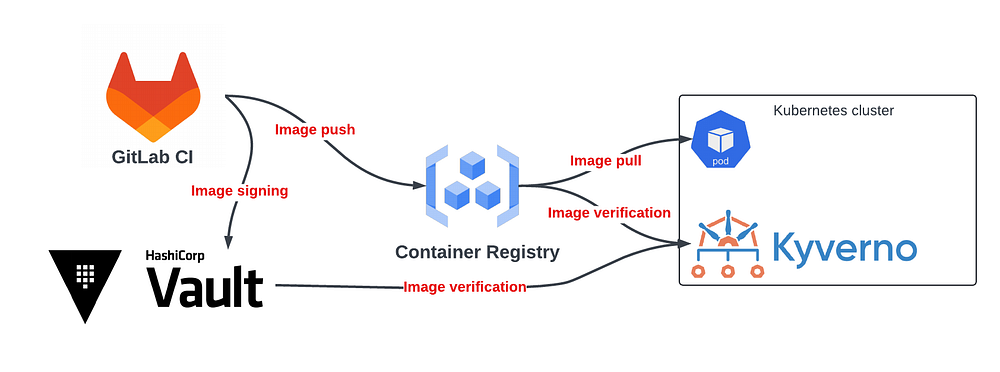

The overall architecture looks like this:

- As part of the GitLab CI pipeline, we will use Cosign to sign the container image and upload the resulting signature to the GitLab Container Registry. We will use Vault as the KMS provider.

- We will create a Kyverno ClusterPolicy that checks that 1) the image is pulled from the trusted container registry and 2) it is signed by the trusted Vault key. If the image is unsigned or signed by an untrusted key, Kyverno will block pod creation (if the ClusterPolicy mode is “Enforce”). Alternatively, we can create the ClusterPolicy in Audit mode, in which case it will allow pod creation but generate a warning event that can be investigated later.

- Each time we build a new image, a new signature is uploaded and later verified by Kyverno in Kubernetes. This means that the more frequently we build the image, the more signatures accumulate. Ideally, we should set up periodic registry clean-up to get rid of outdated image signatures.
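The clean-up mentioned in the last point could start from something like the sketch below. It relies on the fact that Cosign publishes each signature under a tag of the form sha256-<hex>.sig in the image's repository. The function name orphan_sig_tags and the two input files are assumptions made for illustration; how you list tags and live digests depends on your registry and is left out here.

```shell
#!/usr/bin/env bash
# Sketch: list Cosign signature tags whose signed image no longer exists.
# orphan_sig_tags is a hypothetical helper, not part of Cosign or GitLab.
#
# Usage: orphan_sig_tags TAGS_FILE DIGESTS_FILE
#   TAGS_FILE    - every tag in the repository, one per line
#   DIGESTS_FILE - digests of images still present, one "sha256:<hex>" per line
orphan_sig_tags() {
  local tags_file=$1 digests_file=$2 tag digest
  while IFS= read -r tag || [ -n "$tag" ]; do
    case $tag in
      sha256-*.sig)
        # Turn "sha256-<hex>.sig" back into "sha256:<hex>".
        digest=${tag%.sig}
        digest="sha256:${digest#sha256-}"
        # Print the signature tag only if no live image has that digest.
        grep -qxF "$digest" "$digests_file" || printf '%s\n' "$tag"
        ;;
    esac
  done < "$tags_file"
}
```

The function only reports candidates; deleting them is deliberately a separate, manual step in this sketch.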

An important point here is that everything happens transparently to the user. The user is not aware that the image signature is being checked, and no modification to existing deployment manifests is required.

You’ll need the following components installed:

- Kubernetes cluster.

- HashiCorp Vault cluster: https://developer.hashicorp.com/vault/install

- Kyverno: https://kyverno.io/docs/installation/

Vault setup

The first thing you’ll need to do is create a Vault transit key. In this example the key is named cosign. The key type has to support signing, and the transit default (aes256-gcm96) is encryption-only, so the type is set explicitly here:

    $ vault secrets enable transit
    Success! Enabled the transit secrets engine at: transit/
    $ vault write -f transit/keys/cosign type=ecdsa-p256
    Success! Data written to: transit/keys/cosign

The next thing is to define a Vault policy that allows the above key to be used. I created the following policy:

    $ cat > cosign.hcl <<EOF
    path "transit/keys/cosign" {
      capabilities = ["read"]
    }
    path "transit/hmac/cosign/*" {
      capabilities = ["update"]
    }
    path "transit/sign/cosign/*" {
      capabilities = ["update"]
    }
    path "transit/verify/cosign" {
      capabilities = ["create"]
    }
    path "transit/verify/cosign/*" {
      capabilities = ["update"]
    }
    EOF
    $ vault policy write cosign cosign.hcl
    Success! Uploaded policy: cosign

Both Cosign and Kyverno will need Vault roles with the above policy attached in order to sign (with Cosign) and verify (with Kyverno) image signatures. Both will also need access to the container registry that stores the image and its signatures.
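Since Cosign needs a key that can actually sign, it may be worth checking the key type before wiring up the roles. The following is a minimal sketch, assuming the vault CLI is already authenticated and the engine is mounted at transit/. The helper name key_can_sign is hypothetical, and the list of types is the set of asymmetric transit key types I know to support signing.

```shell
#!/usr/bin/env bash
# Sketch: check that a transit key has a type usable for signing.
# key_can_sign is a hypothetical helper; it assumes the transit engine
# is mounted at "transit/" and that the vault CLI can read the key.
key_can_sign() {
  local key=$1 key_type
  # "vault read -field=type" prints only the key type, e.g. "ecdsa-p256".
  key_type=$(vault read -field=type "transit/keys/$key") || return 2
  case $key_type in
    ecdsa-p256|ecdsa-p384|ecdsa-p521|ed25519|rsa-2048|rsa-3072|rsa-4096)
      echo "ok: $key is $key_type"
      ;;
    *)
      echo "cannot sign: $key is $key_type" >&2
      return 1
      ;;
  esac
}

# Example: key_can_sign cosign
```

If the check passes, `cosign public-key --key hashivault://cosign` can additionally be used to export the public half of the key for inspection.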

Cosign will be used as part of the GitLab pipeline. To authenticate GitLab pipelines to Vault we can use the GitLab JWT provider, as described in their article. I won’t cover GitLab → Vault authentication here (otherwise this article would become far too long).

The next question is how we’re gonna authenticate Kyverno to Vault. Ideally, because Kyverno runs in Kubernetes, we would like to use the Kubernetes auth method as the most secure one. However, Kyverno doesn’t yet support the Kubernetes auth method for Vault (see the GitHub issue). The only method available to us so far is the VAULT_TOKEN environment variable for Kyverno. It’s far from ideal, but it is what it is.

Now we need to create a long-lived Vault token with the cosign policy attached:

    $ vault token create -policy=cosign -orphan -ttl=8760h
    Key                  Value
    ---                  -----
    token                hvs.CAESIEGiDhSts4rDwJQw4Twre1IJACXB5h288PWJVgZFMSbcGh4KHGh2cy5NZ09icUxqdmZnYU1wd1VJZGE5M0pDV0k
    token_accessor       RtW8rWhB7trpfyfm9IC8Fow1
    token_duration       8760h
    token_renewable      true
    token_policies       ["cosign"]
    identity_policies    []
    policies             ["cosign"]

In this example we created a token with a TTL of one year (I really hope Kyverno will start supporting the Kubernetes auth method soon). The token has to be an orphan so that it does not depend on its parent token’s TTL.

Save the long token value above; we will need it in the next step.
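Before handing the token to Kyverno, it can be useful to confirm that it really carries the capabilities granted by the cosign policy. This is a sketch built on `vault token capabilities`. The helper name token_has_capability is hypothetical, and the path transit/verify/cosign/sha2-256 in the example is only an illustration of a path matched by the policy above.

```shell
#!/usr/bin/env bash
# Sketch: check whether a token has a given capability on a path.
# token_has_capability is a hypothetical helper around the vault CLI.
#
# Usage: token_has_capability TOKEN PATH CAPABILITY
token_has_capability() {
  local token=$1 path=$2 wanted=$3 caps
  # The CLI prints a comma-separated list, e.g. "read" or "create, update".
  caps=$(vault token capabilities "$token" "$path") || return 2
  caps=${caps// /}            # drop spaces so the list becomes "create,update"
  case ",$caps," in
    *",$wanted,"*) return 0 ;;
    *)             return 1 ;;
  esac
}

# Example (illustrative path):
#   token_has_capability "$VAULT_TOKEN" transit/keys/cosign read
#   token_has_capability "$VAULT_TOKEN" transit/verify/cosign/sha2-256 update
```

A token without access typically reports "deny", which this helper treats as a failed check for any requested capability.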

Log in to Kubernetes and go to the namespace where Kyverno is installed (in my case it’s kyverno). Now let’s create a secret containing the Vault connection details (the Vault URL and the Vault token from above):

    $ export VAULT_ADDR=<YOUR VAULT URL>
    $ export VAULT_TOKEN=<YOUR VAULT TOKEN>
    $ kubectl -n kyverno create secret generic kyverno-vault-integration --from-literal=VAULT_ADDR=$VAULT_ADDR --from-literal=VAULT_TOKEN=$VAULT_TOKEN

Prepare a patch for the Kyverno admission controller deployment and apply it:

    $ cat > patch.yaml <<EOF
    spec:
      template:
        spec:
          containers:
          - name: kyverno
            env:
            - name: VAULT_ADDR
              valueFrom:
                secretKeyRef:
                  key: VAULT_ADDR
                  name: kyverno-vault-integration
            - name: VAULT_TOKEN
              valueFrom:
                secretKeyRef:
                  key: VAULT_TOKEN
                  name: kyverno-vault-integration
    EOF
    $ kubectl -n kyverno patch deploy kyverno-admission-controller --patch-file patch.yaml
    deployment.apps/kyverno-admission-controller patched

Alternatively, if you installed Kyverno using the Helm chart (which is the recommended way), you can include the following lines in your Helm values:

    admissionController:
      container:
        extraEnvVars:
        - name: VAULT_ADDR
          valueFrom:
            secretKeyRef:
              key: VAULT_ADDR
              name: kyverno-vault-integration
        - name: VAULT_TOKEN
          valueFrom:
            secretKeyRef:
              key: VAULT_TOKEN
              name: kyverno-vault-integration

Now Kyverno has all it needs to authenticate to Vault.
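To double-check that the variables actually landed in the admission controller, something along these lines can help. It is a sketch that assumes the namespace, deployment and container names used in this article (kyverno, kyverno-admission-controller, kyverno); kyverno_has_vault_env is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the Kyverno admission controller has the Vault env vars.
# kyverno_has_vault_env is a hypothetical helper; the namespace, deployment
# and container names are the ones assumed throughout this article.
kyverno_has_vault_env() {
  local names wanted
  # jsonpath prints the env var names of the "kyverno" container, space-separated.
  names=$(kubectl -n kyverno get deploy kyverno-admission-controller \
    -o jsonpath='{.spec.template.spec.containers[?(@.name=="kyverno")].env[*].name}') || return 2
  for wanted in VAULT_ADDR VAULT_TOKEN; do
    case " $names " in
      *" $wanted "*) ;;
      *) echo "missing: $wanted" >&2; return 1 ;;
    esac
  done
  echo "ok: VAULT_ADDR and VAULT_TOKEN are set"
}

# Example: kyverno_has_vault_env
```

This only checks that the variables are defined on the deployment, not that the token they reference is valid.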

GitLab pipeline

Now on to the GitLab CI pipeline. I used the following pipeline snippet to build, push and sign the container image:

    container-build:
      stage: build
      image: ghcr.io/angapov/kaniko-cosign
      variables:
        VAULT_AUTH_PATH: auth/gitlab/login
        VAULT_AUTH_ROLE: cosign
        COSIGN_KEY: hashivault://cosign
        KANIKO_ARGS: --context=$CI_PROJECT_DIR --dockerfile=${CI_PROJECT_DIR}/Containerfile --digest-file=./digest
      id_tokens:
        VAULT_ID_TOKEN:
          aud: https://gitlab.com
      before_script:
        - echo "{\"auths\":{\"${CI_REGISTRY}\":{\"auth\":\"$(echo -n ${CI_REGISTRY_USER}:${CI_REGISTRY_PASSWORD} | base64 -w0)\"}}}" > /kaniko/.docker/config.json
        - export VAULT_TOKEN=$(vault write --field=token $VAULT_AUTH_PATH role=$VAULT_AUTH_ROLE jwt=$VAULT_ID_TOKEN)
      script:
        - /kaniko/executor ${KANIKO_ARGS} --destination=${CI_REGISTRY_IMAGE}:${CI_COMMIT_BRANCH}
        - cosign sign ${CI_REGISTRY_IMAGE}@$(cat digest) --tlog-upload=false

This snippet requires some explanation:

- Here we use Kaniko as the image builder. I had to build a custom image, ghcr.io/angapov/kaniko-cosign (which, by the way, is also signed with Cosign), that contains the kaniko, cosign and vault binaries. Kaniko itself isn’t important here; you can replace it with Docker, Podman, BuildKit or anything else you want.

- VAULT_AUTH_PATH is the Vault auth path for the GitLab JWT auth method (see documentation). VAULT_AUTH_ROLE is the corresponding Vault JWT role. The same goes for id_tokens: it’s used to authenticate GitLab to Vault. I don’t explain how to set this up in this article.

- COSIGN_KEY is the environment variable Cosign uses to determine which key should be used for image signing. In this case it points to the Vault transit key named cosign.

- KANIKO_ARGS is the common set of arguments passed to Kaniko. Here I use --digest-file=./digest to write the image digest to a file, where Cosign can read it on the next line. The reason is that I build the image with a named tag (like main) instead of a digest. Cosign expects to sign an image by digest, not by tag, because a tag is mutable whereas a digest is not.
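The first before_script line of the pipeline is dense, so here is the same idea unpacked: it builds the registry credentials file that Kaniko reads, which is a JSON document holding "user:password" encoded in base64. The sketch below is only meant to show that structure; registry_auth_json is a hypothetical helper, and it uses `base64 | tr -d '\n'` as a portable stand-in for `base64 -w0`.

```shell
#!/usr/bin/env bash
# Sketch: build the Docker-style credentials JSON that the pipeline writes
# to /kaniko/.docker/config.json. registry_auth_json is a hypothetical helper.
#
# Usage: registry_auth_json REGISTRY USER PASSWORD
registry_auth_json() {
  local registry=$1 user=$2 password=$3 auth
  # base64("user:password") on a single line.
  auth=$(printf '%s' "$user:$password" | base64 | tr -d '\n')
  printf '{"auths":{"%s":{"auth":"%s"}}}\n' "$registry" "$auth"
}

# Example, using the predefined GitLab CI variables from the pipeline:
#   registry_auth_json "$CI_REGISTRY" "$CI_REGISTRY_USER" "$CI_REGISTRY_PASSWORD" \
#     > /kaniko/.docker/config.json
```

Note that the sketch does no JSON escaping, so it assumes the registry name contains no quotes or backslashes.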

The fun part begins in the last line:

    cosign sign ${CI_REGISTRY_IMAGE}@$(cat digest) --tlog-upload=false

We’re signing the container image, but we’re NOT uploading the signature record (--tlog-upload=false) to the public transparency log called Rekor (https://rekor.sigstore.dev).

Now, what exactly is Rekor, and why don’t I wanna use it in this case? Rekor by definition is an “immutable, tamper-resistant ledger of metadata generated within a software project’s supply chain”. Rekor is very similar to the TLS Certificate Transparency log (CT log), which is the live, public, append-only log of all issued public TLS certificates. In the context of Cosign, Rekor maintains public records of all data signed with Cosign, allowing for querying, verification and auditing.

In my case I use Cosign to sign private container images for my company, so it makes absolutely zero sense to upload private signatures to public storage. It is, however, entirely possible to deploy a private Rekor instance and use it internally within the company.

OK, we uploaded an image signature. But where is the signature actually stored? Here’s an example:

Image URL: registry.gitlab.com/testcompany/testimage@sha256:c73ce979afcfbbe03084fe77853e0e0e6b740247b0415eb503810edd9b2f5f9b

Image Signature URL:

registry.gitlab.com/testcompany/testimage:sha256-c73ce979afcfbbe03084fe77853e0e0e6b740247b0415eb503810edd9b2f5f9b.sig

As you can see, the signature is stored in the same image repository as the main image. It is published under a tag derived from the image digest: the “sha256:” prefix becomes “sha256-” and “.sig” is appended. Very easy to remember!
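The naming scheme is simple enough to reproduce by hand. The sketch below derives the signature location from a digest reference and also wraps a manual verification against the Vault key, which can be handy for debugging before Kyverno gets involved. Both function names are hypothetical. The verification assumes that cosign is installed, that VAULT_ADDR and VAULT_TOKEN are exported, and that the signature was created without a transparency log entry, as in this article.

```shell
#!/usr/bin/env bash
# Sketch: compute where Cosign stores the signature for an image digest,
# and verify an image against the Vault transit key from this article.
# signature_ref and verify_with_vault_key are hypothetical helper names.

# Usage: signature_ref IMAGE@sha256:HEX   ->   IMAGE:sha256-HEX.sig
signature_ref() {
  local ref=$1 repo digest
  case $ref in
    *@sha256:*) ;;
    *) echo "expected IMAGE@sha256:<hex>, got: $ref" >&2; return 1 ;;
  esac
  repo=${ref%@*}       # everything before the "@"
  digest=${ref#*@}     # "sha256:<hex>"
  printf '%s:sha256-%s.sig\n' "$repo" "${digest#sha256:}"
}

# Usage: verify_with_vault_key IMAGE@sha256:HEX
# --insecure-ignore-tlog skips the Rekor lookup, matching --tlog-upload=false.
verify_with_vault_key() {
  cosign verify --key hashivault://cosign --insecure-ignore-tlog=true "$1"
}
```

Cosign can also print the signature location itself with `cosign triangulate IMAGE`, which should agree with what signature_ref computes.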

So far we have uploaded the image and the image signature. What’s next? Now let’s verify it in Kubernetes using Kyverno!

Kyverno

Let’s create the following Kyverno ClusterPolicy:

    apiVersion: kyverno.io/v1
    kind: ClusterPolicy
    metadata:
      name: container-image-policy
    spec:
      validationFailureAction: Enforce
      background: false
      webhookTimeoutSeconds: 30
      failurePolicy: Fail
      rules:
      - name: gitlab-container-registry-required
        match:
          resources:
            kinds:
            - Pod
            namespaces:
            - test
        validate:
          message: "Image must be from registry.gitlab.com/testcompany/* container registry"
          pattern:
            spec:
              containers:
              - image: "registry.gitlab.com/testcompany/*"
      - name: verify-image-signature
        match:
          resources:
            kinds:
            - Pod
            namespaces:
            - test
        verifyImages:
        - imageReferences:
          - "registry.gitlab.com/testcompany/*"
          attestors:
          - count: 1
            entries:
            - keys:
                rekor:
                  ignoreTlog: true
                  url: https://rekor.sigstore.dev
                kms: hashivault://cosign

This policy has validationFailureAction: Enforce, which means it will deny any resource (in this case a Pod) that doesn’t satisfy the rules. It also has background: false, which means it isn’t applied to existing resources in background scans. Finally, it has failurePolicy: Fail, so that if Kyverno for any reason fails to respond to a request from kube-apiserver, the latter will treat the request as failed.

    match:
      resources:
        kinds:
        - Pod
        namespaces:
        - test

This tells Kyverno to apply the policy only to Pods in the namespace test. We can omit namespaces, in which case the policy takes effect cluster-wide.

The policy defines two rules:

- The first rule, “gitlab-container-registry-required”, makes sure that any Pod in the “test” namespace runs a container image from the registry.gitlab.com/testcompany/* container registry. Any other Pod will be denied.

- The second rule, “verify-image-signature”, makes any image from the registry.gitlab.com/testcompany/* registry subject to signature validation. The signature provider is kms: hashivault://cosign, and with ignoreTlog: true we don’t look for a log record in Rekor.
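When rolling the policy out to an existing cluster, it may be safer to start in Audit mode and switch to Enforce once the reports look clean. The sketch below flips the mode with a merge patch. set_policy_mode is a hypothetical helper name, and it assumes the validationFailureAction field sits at the policy spec level, as in the manifest above.

```shell
#!/usr/bin/env bash
# Sketch: switch a Kyverno ClusterPolicy between Audit and Enforce.
# set_policy_mode is a hypothetical helper around "kubectl patch".
#
# Usage: set_policy_mode POLICY_NAME Audit|Enforce
set_policy_mode() {
  local policy=$1 mode=$2
  case $mode in
    Audit|Enforce) ;;
    *) echo "mode must be Audit or Enforce, got: $mode" >&2; return 1 ;;
  esac
  # A JSON merge patch that only touches spec.validationFailureAction.
  kubectl patch clusterpolicy "$policy" --type merge \
    -p "{\"spec\":{\"validationFailureAction\":\"$mode\"}}"
}

# Example:
#   set_policy_mode container-image-policy Audit
#   set_policy_mode container-image-policy Enforce
```

In Audit mode, violations should show up in Kyverno's policy reports for the namespace (for example via `kubectl get policyreport -n test`) rather than as rejected requests.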

Let’s test the policy by running an image from an untrusted registry:

    $ kubectl run test --image ubuntu
    Error from server: admission webhook "validate.kyverno.svc-fail" denied the request:
    resource Pod/test/test was blocked due to the following policies
    container-image-policy:
      gitlab-container-registry-required: 'validation error: Image must be from registry.gitlab.com/testcompany/*
        container registry. rule gitlab-container-registry-required failed at path /spec/containers/0/image/'

Now let’s try an image from the trusted registry but without a signature:

    $ kubectl run test --image registry.gitlab.com/testcompany/testimage:test
    Error from server: admission webhook "mutate.kyverno.svc-fail" denied the request:
    resource Pod/test/test was blocked due to the following policies
    container-image-policy:
      verify-image-signature: 'failed to verify image registry.gitlab.com/testcompany/testimage:test:
        .attestors[0].entries[0].keys: no matching signatures'

Finally, let’s run the image that has a proper signature:

    $ kubectl run test --image registry.gitlab.com/testcompany/testimage:main
    pod/test created

It worked!
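The three manual checks above can be repeated without creating any Pods by using a server-side dry run. This is a sketch under the assumption that Kyverno's admission webhooks take part in dry-run requests in your cluster. admission_verdict and the Pod name sigcheck are hypothetical, and the namespace test matches the policy above.

```shell
#!/usr/bin/env bash
# Sketch: ask the API server whether a Pod with a given image would be admitted.
# admission_verdict is a hypothetical helper; "sigcheck" is an arbitrary Pod name.
# Any failure is reported as "denied", including errors unrelated to the policy.
#
# Usage: admission_verdict IMAGE
admission_verdict() {
  local image=$1
  if kubectl -n test run sigcheck --image "$image" --dry-run=server >/dev/null 2>&1; then
    echo "allowed"
  else
    echo "denied"
  fi
}

# Example:
#   for image in ubuntu \
#                registry.gitlab.com/testcompany/testimage:test \
#                registry.gitlab.com/testcompany/testimage:main; do
#     printf '%s -> %s\n' "$image" "$(admission_verdict "$image")"
#   done
```

Because nothing is persisted in a dry run, the loop can be rerun as often as needed, for example after rotating the signing key.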

Conclusion

Cosign is a very powerful tool that enhances the supply chain security of your applications. The Sigstore project as a whole offers several other cool products and features:

- Cosign supports various key providers: self-managed keys, AWS KMS, Azure Key Vault, GCP KMS and even Kubernetes secrets!

- Keyless signing using OIDC and Fulcio. It lets you sign data with short-lived X.509 certificates using an OIDC provider such as Google, Microsoft, GitHub and so on. The benefit is that you don’t have to store any signing keys.

- Sigstore has its own policy controller that can verify container images in Kubernetes. It has some small advantages over Kyverno, but to me Kyverno looks powerful enough and more universal.

What I’m really missing in Kyverno is the ability to define a custom image signature registry, which Cosign supports. That would allow storing signatures in a dedicated registry and avoid creating a big mess in the main image registry. Support for it is planned for the Kyverno 1.13 release.